Digital stage technology from augmented reality to AI-based camera tracking systems

Digital technologies have been an integral part of our theatre work since 2019. We see ourselves as pioneers in the fields of augmented reality and artificial intelligence – a competence that we intend to continuously deepen and expand in the future, thereby contributing to Berlin’s future as an internationally visible laboratory for music and dance theatre.

‘We started in 2019, on the anniversary of the Bauhaus, when we were asked to work with the most advanced technology in theatre. At that time, we visualised abstract forms and historical images and related them to performers in “Verrat der Bilder” (Betrayal of Images). That was our first encounter with this technology, on which the AR Loop Machine is now based. However, we soon realised that real people must not disappear behind the technology, which led to the form we are now presenting. An important part of the process was the ability to integrate motion data using motion capture suits, for which the coronavirus shutdown gave us extra development time. However, AR glasses remain an indispensable tool for such productions. All other projections in the room, often mistakenly referred to as holograms, are based on two-dimensional tricks (Pepper’s ghost from 1862). Documentation of such illusions creates expectations that cannot be fulfilled in reality, or only with glasses technology.’ [Oliver Proske in conversation with Thomas Irmer | Theater der Zeit 9/2022]

Since 2022, we have been working with Prof. Dr.-Ing. Steffen Borchers-Tigasson (HTW Berlin) on the development of an AI-based camera tracking system. The aim is that in future, performers will no longer have to adapt to the technology, but that the technology will adapt intelligently and flexibly to their movements. This should open up new artistic possibilities for expression on stage and make work processes more efficient in the long term. In the course of the cooperation, we have already developed our own trilateration algorithm that is specifically tailored to the requirements of our theatre operations. Through the targeted analysis of theoretical errors, this enables significantly higher positional accuracy. In a further project phase, the aim is to combine the AI-based camera tracking system with a Decawave-supported positioning system and implement AI-based sensor fusion. This should enable a significant technological leap forward and realise the long-standing goal of a precise, automated performer tracking solution on stage.
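Our own algorithm is not reproduced here. For readers who want a rough idea of what trilateration means, the following is a minimal textbook sketch, not our implementation: it estimates a position on a flat stage from measured distances to fixed anchor points, using a linearised least-squares fit. The function name `trilaterate_2d`, the anchor coordinates and the performer position are hypothetical values chosen for illustration.

```python
import math


def trilaterate_2d(anchors, distances):
    """Estimate (x, y) from distances to three or more fixed anchors.

    Generic textbook method (linearised least squares), shown only as an
    illustration. It is an assumption, not the algorithm described above.
    """
    if len(anchors) < 3 or len(anchors) != len(distances):
        raise ValueError("need at least three anchors, one distance per anchor")
    (x0, y0), d0 = anchors[0], distances[0]
    # Subtracting the first circle equation from each of the others removes
    # the quadratic terms and leaves a linear system A @ [x, y] = b.
    rows = []
    for (xi, yi), di in zip(anchors[1:], distances[1:]):
        a1 = 2.0 * (xi - x0)
        a2 = 2.0 * (yi - y0)
        b = xi**2 + yi**2 - x0**2 - y0**2 + d0**2 - di**2
        rows.append((a1, a2, b))
    # Normal equations (A^T A) p = A^T b, solved in closed form for 2x2.
    s11 = sum(a1 * a1 for a1, _, _ in rows)
    s12 = sum(a1 * a2 for a1, a2, _ in rows)
    s22 = sum(a2 * a2 for _, a2, _ in rows)
    t1 = sum(a1 * b for a1, _, b in rows)
    t2 = sum(a2 * b for _, a2, b in rows)
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not determined")
    x = (s22 * t1 - s12 * t2) / det
    y = (s11 * t2 - s12 * t1) / det
    return x, y


# Hypothetical stage: four anchors in the corners of a 10 m x 8 m area.
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 8.0), (10.0, 8.0)]
performer = (3.0, 4.0)
# Ideal, noise-free distances; real range measurements would carry errors.
distances = [math.dist(anchor, performer) for anchor in anchors]
print(trilaterate_2d(anchors, distances))
```

With more than three anchors the system is overdetermined, so measurement errors are averaged out to some degree. How such errors are analysed and compensated in practice is exactly the part that has to be tailored to the venue.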

The camera stays on automatically

[Campus Stories Online Magazine of HTW Berlin | Gisela Hüttinger | 2023]

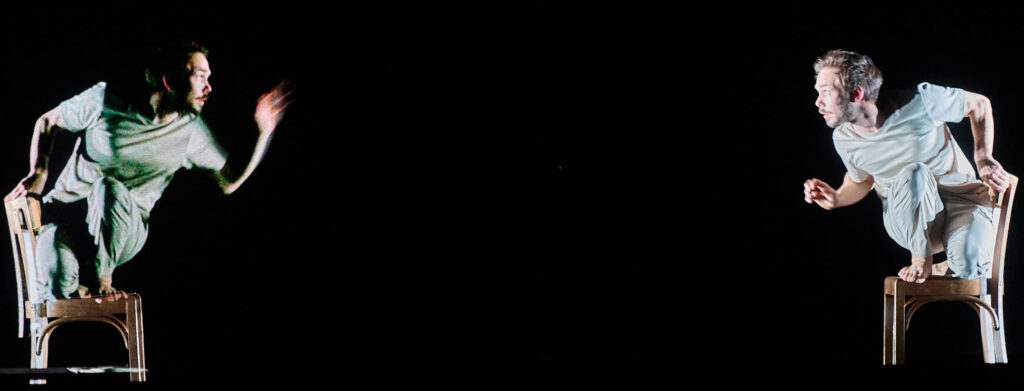

The people on stage move quickly, jump, disappear, and return. Their facial expressions and many of their gestures would remain hidden were it not for the moving close-ups of faces and bodies on the screen behind the stage. This allows even the audience in the very back row to see anger, resignation or hope. This is not uncommon for the Berlin-based company Nico and the Navigators. The award-winning troupe is known for integrating digital components into its performances. Because it wants to further develop its artistic language, it asked Prof. Dr.-Ing. Steffen Borchers-Tigasson from the Electrical Engineering programme at HTW Berlin for support. The expert in automation and artificial intelligence (AI) is now further developing the camera technology based on AI.

‘Technologically feasible for only a few years’

When the request came, Prof. Dr.-Ing. Borchers-Tigasson did not hesitate for a second. He had already attended private performances by ‘Nico and the Navigators’, including those with video projections. ‘The company is incredibly tech-savvy,’ he says. He was immediately interested in the task of developing automatic, AI-based camera tracking. ‘Technologically, this has only been possible for a few years,’ says the scientist. At the same time, he says, he really enjoys giving artists a new tool and opening up new possibilities for them.

More freedom for dancers and actors

Giving the dancers and actors on stage more freedom was exactly what client Oliver Proske wanted. He founded ‘Nico and the Navigators’ with Nicola Hümpel 25 years ago. As a producer and managing director, the trained industrial designer and stage designer is responsible for the digital stage, the concept and the appropriate technology in many productions. He finds the current camera technology too static. ‘It restricts the artists,’ he believes. This is because the cameras have to be preset to the second and all performers have to be at exactly defined points on stage to enable projection-ready close-ups. ‘To become more flexible, we experimented with a sensor system ourselves for a long time,’ says Proske. But the aesthetic results were so unsatisfactory that they sought outside expertise.

An intelligent system is needed

The requirement is for an intelligent camera system that automatically follows artists on stage with complete flexibility, regardless of how fast they move, and produces images that can be selected by the camera control team for projection in real time. ‘The technical requirements are high,’ admits Prof. Dr.-Ing. Borchers-Tigasson.

Recognising and following people

Firstly, the camera has to be mobile and able to zoom in and out independently. Secondly, it must not only transmit image data in the form of pixels. Rather, it has to recognise people, follow them reliably and, in case of doubt, track them down again if they leave the stage briefly and return later, which happens regularly in dance and theatre. And if two cameras are pointed at the same scene, the system has to work out where the same person appears in each image. This is easy for the human eye or brain thanks to hair colour, body size or other characteristics. The camera has a much harder job. It has to perform a kind of face recognition. ‘This is exactly where artificial intelligence comes into play,’ says Prof. Dr.-Ing. Borchers-Tigasson.
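The article does not say how the system recognises the same person in two camera images. One common approach, shown here purely as an assumption, is to describe each detected person by a numeric appearance vector and to pair up the most similar vectors. In the sketch below, the function names, the threshold and the three-number vectors are hypothetical; in a real system the vectors would come from a trained recognition model.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_across_cameras(cam_a, cam_b, threshold=0.8):
    """Greedily pair detections from two cameras by appearance similarity.

    cam_a and cam_b map a detection id to its appearance vector. Pairs whose
    similarity is below the (hypothetical) threshold stay unmatched.
    """
    pairs = sorted(
        (
            (cosine_similarity(fa, fb), ia, ib)
            for ia, fa in cam_a.items()
            for ib, fb in cam_b.items()
        ),
        reverse=True,
    )
    matches, used_a, used_b = {}, set(), set()
    for score, ia, ib in pairs:
        if score < threshold:
            break  # all remaining pairs are even less similar
        if ia in used_a or ib in used_b:
            continue  # each detection may be matched only once
        matches[ia] = ib
        used_a.add(ia)
        used_b.add(ib)
    return matches


# Made-up appearance vectors for two people seen by camera A and three
# detections seen by camera B.
camera_a = {"a1": [1.0, 0.0, 0.1], "a2": [0.0, 1.0, 0.1]}
camera_b = {"b1": [0.1, 1.0, 0.0], "b2": [1.0, 0.1, 0.0], "b3": [0.0, 0.0, 1.0]}
print(match_across_cameras(camera_a, camera_b))
```

The same comparison can be used to find a performer again after they have left the stage and returned: the stored vector is compared with the new detections instead of with a second camera.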

‘But nobody wants a robot camera’

This raises the question of whether such an intelligent system will make humans behind the camera redundant in the medium term. Quite the contrary, everyone involved is convinced. ‘Nobody wants a robot camera,’ says Oliver Proske. Instead, the technology would give rise to completely new forms of artistic expression.

This is exactly what Nico and the Navigators are interested in.

Artistically sophisticated camera direction

The scenario in which the new technology is used can be imagined as follows: During the performance, the camera operator receives real-time images from the cameras positioned in the room. She can change their settings using an interface. The camerawoman then decides which actors to focus on, whether the respective camera should zoom in, from which angle to film and which images to show behind the stage. ‘This is a creative and demanding process that places higher demands on the camerawoman while at the same time giving her more artistic freedom,’ says Prof. Dr.-Ing. Borchers-Tigasson, referring to ‘camera direction’.

Electrical engineering students were also involved

The scientist developed the intelligent camera system step by step in collaboration with the company. Electrical engineering students were also involved in the initial phase with their final theses and project work. ‘It wasn’t difficult at all to spark the students’ interest,’ says the university lecturer happily. In autumn, he accompanied the company to their rehearsal rooms and adapted the technology to the environment and the plot.

Practical test in December at Berlin’s Radialsystem

In December 2023, the intelligent camera system will be tested in a real environment for the first time when the play ‘sweet surrogates’ is revived. If everything goes well, it will also be used for the performance at Berlin’s Radialsystem, where ‘Nico and the Navigators’ perform regularly. Prof. Dr.-Ing. Borchers-Tigasson will not be sitting in the audience, of course, but will be backstage, stage fright included.

Oliver Proske of NICO AND THE NAVIGATORS in conversation with Thomas Irmer

After Jonas Zipf's essay in the first part of the June issue focused on the entire theatre operating system, the following pages deal with new technological applications: The virtual extension of the stage space, an exchange about the future of theatre criticism and, for the first time, the printing of a play text written by an AI.

With the software you developed, you experience a hybrid space in which artificial elements combine with a real performance. The principle is based on augmented reality glasses, which are often used in theatre, but expands the spectrum. What is it exactly?

You could say that we create an immaterial dreamscape in which what has already happened is superimposed on current events: real performers are confronted with imaginary images, which will certainly open up new possibilities for storytelling in the future - as a continuation of virtual reality, which has so far mainly created a cinematic illusion. With the technology we use, it becomes a live spatial encounter of actually existing bodies with moving virtual elements that could previously only be imagined in their three-dimensionality.

In concrete terms, it is a kind of manikin that moves together with the real dancers in your production "You have to render your life!"

These figures were first animated by the two performers themselves, whose movements were captured by data suits. The dancers are thus in dialogue with their own images, but only the audience members wearing the glasses perceive this. In the rehearsal process, the performers must therefore rehearse the movements that exactly match their counterparts - the choreography is synchronised quite classically via the music. We have the possibility to let the figurines appear in any size and number, i.e. in the most diverse forms - and thus to show the harmony as well as the conflict between creator and creature. Of course, this arouses a wealth of associations - from Genesis to Pygmalion and Frankenstein to current debates on the opportunities and risks of artificial intelligence.

Who designs this?

The dancer and choreographer Yui Kawaguchi has been working with NICO AND THE NAVIGATORS for years, and has now also discovered digital media as an extension of her creative expression. She developed this with the two dancers, recorded their movements and guided the rehearsal process. Nicola Hümpel came on board as a director in an advisory capacity. Technically, you have to know that we developed the software independently of the choreography that is now designed with it, which on the one hand makes things much more complicated. On the other hand, however, this creates a technology with open possibilities that can be used intuitively - the system is also to be used in the future for other, completely different projects.

So it's no coincidence that you started with dance, that you're not developing text-based theatre for it.

We started in 2019 for the Bauhaus anniversary, when we were asked to work with the most advanced technology in theatre. At that time, we visualised abstract forms and historical images and related them to performers in "Betrayal of Images". That's how we first came into contact with this technology, on which the AR Loop Machine is now based. However, it soon became clear to us that the real person must not disappear behind the technology, which is how the form now presented developed.

An important part of the process was precisely the possibility of integrating motion data with the motion-capture suits, for which the coronavirus shutdown in particular gave us development time. However, the AR glasses remain the indispensable instrument for such productions.

All other projections in space, which are often wrongly called holograms, are based on two-dimensional tricks ("Pepper's ghost" from 1862). Documentation of such illusions gives rise to expectations that cannot be fulfilled in reality, or only with the technology of glasses.

From my first experience, I would say that this is indeed something that could have a future in theatre. What are the perspectives?

To speak with Walter Benjamin: One task of art is to create a demand that the time has not yet come to fully satisfy. As artists, we can only present our work; its effect must be evaluated by the viewers and critics. As I said, "rendering life" is about trying out technological possibilities in a way that is adequate in terms of content.
